|

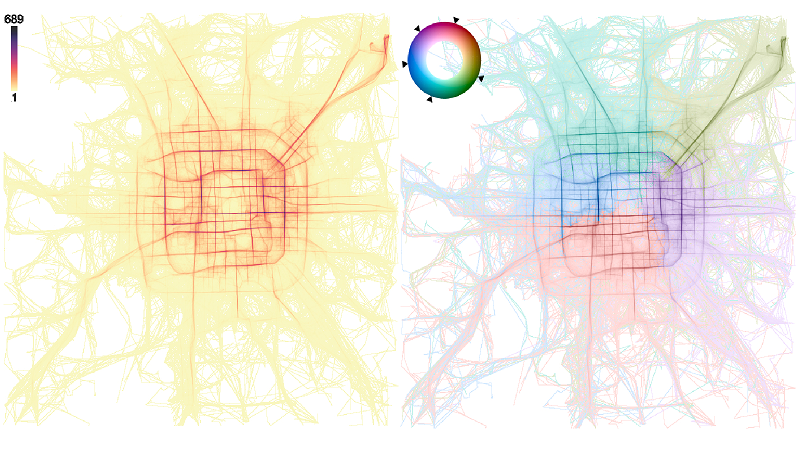

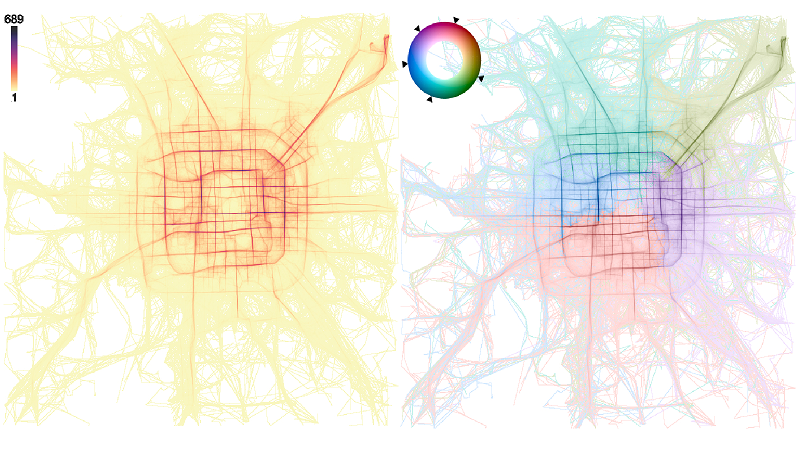

Reducing Ambiguities in Line-based Density Plots by Image-space Colorization

(IEEE Vis 2023)

Yumeng Xue, Patrick Paetzold, Rebecca Kehlbeck, Bin Chen, Kin Chung Kwan, Yunhai Wang, and Oliver Deussen

Abstract

Line-based density plots are used to reduce visual clutter in line charts with a multitude of individual lines. However, these traditional density plots are often perceived ambiguously, which obstructs the user's identification of underlying trends in complex datasets. Thus, we propose a novel image space coloring method for line-based density plots that enhances their interpretability. Our method employs color not only to visually communicate data density but also to highlight similar regions in the plot, allowing users to identify and distinguish trends easily. We achieve this by performing hierarchical clustering based on the lines passing through each region and mapping the identified clusters to the hue circle using circular MDS. Additionally, we propose a heuristic approach to assign each line to the most probable cluster, enabling users to analyze density and individual lines. We motivate our method by conducting a small-scale user study, demonstrating the effectiveness of our method using synthetic and real-world datasets, and providing an interactive online tool for generating colored line-based density plots.

|

|

Autocomplete Repetitive Stroking with Image Guidance

(SIGGRAPH Asia 2021 Technical Communications)

Yilan Chen, Kin Chung Kwan, Li-Yi Wei, and Hongbo Fu

Abstract

Image-guided drawing can compensate for the lack of skills but often requires a significant number of repetitive strokes to create textures. Existing automatic stroke synthesis methods are usually limited to predefined styles or require indirect manipulation that may break the spontaneous flow of drawing. We present a method to autocomplete repetitive short strokes during users’ normal drawing process. Users can draw over a reference image as usual. At the same time, our system silently analyzes the input strokes and the reference to infer strokes that follow users’ input style when certain repetition is detected. Our key idea is to jointly analyze image regions and operation history for detecting and predicting repetitions. The proposed system can reduce tedious repetitive inputs while being fully under user control.

|

|

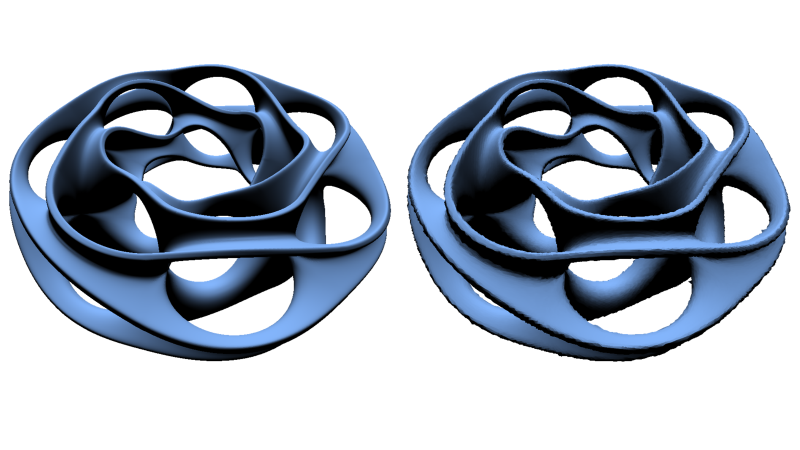

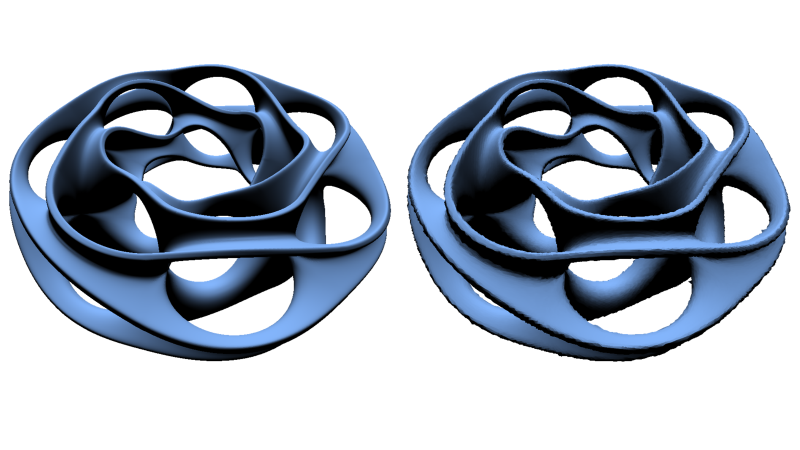

Multi-Class Inverted Stippling

(SIGGRAPH Asia 2021)

Christoph Schulz, Kin Chung Kwan (Joint first author), Michael Becher, Daniel Baumgartner, Guido Reina, Oliver Deussen, and Daniel Weiskopf

Abstract

We introduce inverted stippling, a method to mimic an inversion technique used by artists when performing stippling. To this end, we extend Linde-Buzo-Gray (LBG) stippling to multi-class LBG (MLBG) stippling with multiple layers. MLBG stippling couples the layers stochastically to optimize for per-layer and overall blue-noise properties. We propose a stipple-based filling method to generate solid color backgrounds for inverting areas. Our experiments demonstrate the effectiveness of MLBG in terms of overlap reduction and intensity accuracy. In addition, we showcase MLBG with color stippling and dynamic multi-class blue-noise sampling, which is possible due to its support for temporal coherence.

|

|

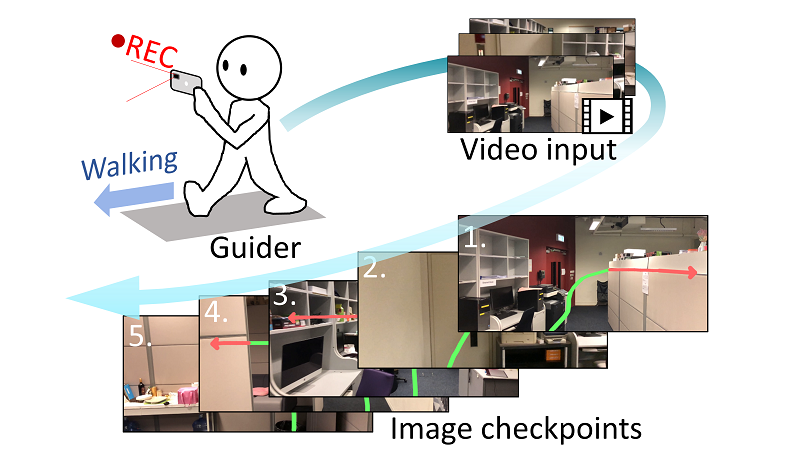

Automatic Image Checkpoint Selection for Guider-Follower Pedestrian Navigation

(CGF 2020)

Kin Chung Kwan and Hongbo Fu

Abstract

In recent years, guider-follower approaches have shown promise for the challenging problem of last-mile or indoor pedestrian navigation without micro-maps or indoor floor plans for path planning. However, the success of such guider-follower approaches is highly dependent on a set of manually and carefully chosen image or video checkpoints. This selection process is tedious and error-prone. To address this issue, we first conduct a pilot study to understand how users as guiders select critical checkpoints from a video recorded while walking along a route, leading to a set of criteria for automatic checkpoint selection. Using these criteria, including visibility, stairs, and clearness, we then implement this automation process. The key behind our technique is a lightweight, effective algorithm using left-hand-side and right-hand-side objects for path occlusion detection, which benefits both automatic checkpoint selection and occlusion-aware path annotation on selected image checkpoints. Our experimental results show that our automatic checkpoint selection method works well in different navigation scenarios. The quality of automatically selected checkpoints is comparable to that of manually selected ones and higher than that of checkpoints chosen by alternative automatic methods.

|

|

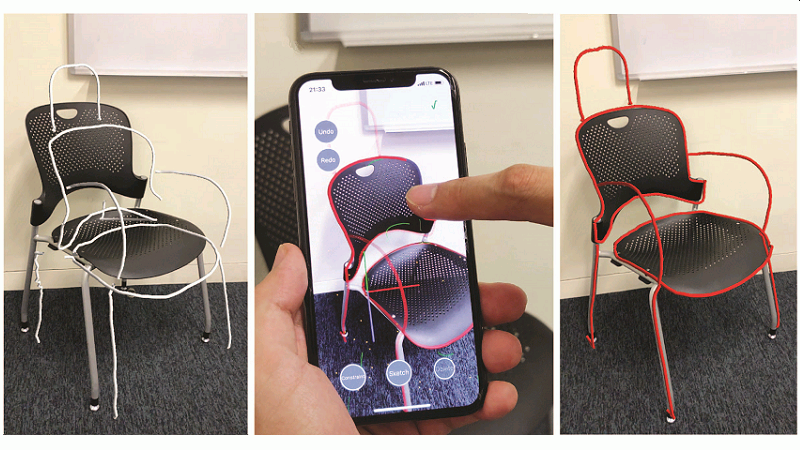

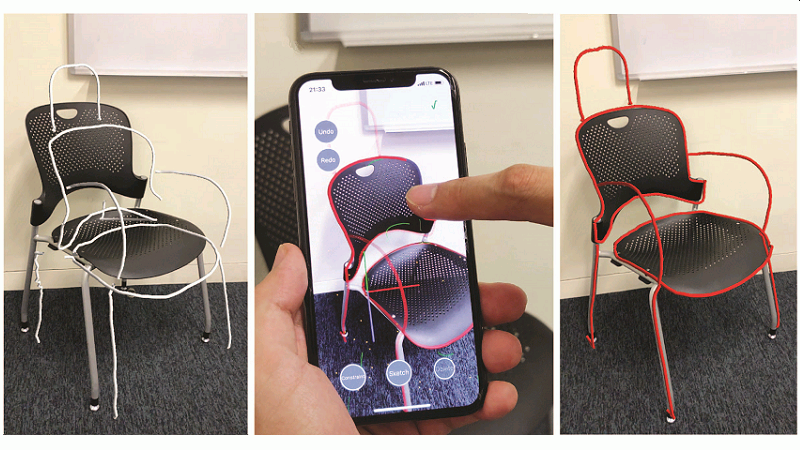

3D Curve Creation on and around Physical Objects with Mobile AR

(TVCG 2020; presented in IEEE VR 2021)

Hui Ye, Kin Chung Kwan, and Hongbo Fu

Abstract

The recent advance in motion tracking (e.g., Visual Inertial Odometry) allows the use of a mobile phone as a 3D pen, thus significantly benefiting various mobile Augmented Reality (AR) applications based on 3D curve creation. However, when creating 3D curves on and around physical objects with mobile AR, tracking might be less robust or even lost due to camera occlusion or textureless scenes. This motivates us to study how to achieve natural interaction with minimum tracking errors during close interaction between a mobile phone and physical objects. To this end, we contribute an elicitation study on input point and phone grip, and a quantitative study on tracking errors. Based on the results, we present a system for direct 3D drawing with an AR-enabled mobile phone as a 3D pen, and interactive correction of 3D curves with tracking errors in mobile AR. We demonstrate the usefulness and effectiveness of our system for two applications: in-situ 3D drawing, and direct 3D measurement.

|

|

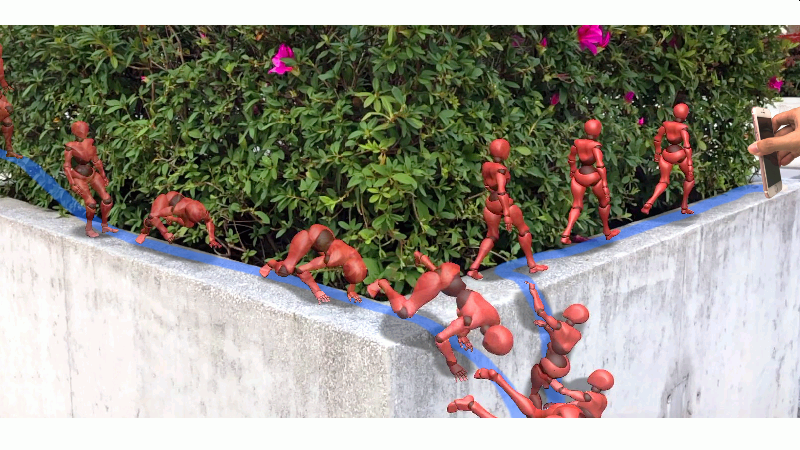

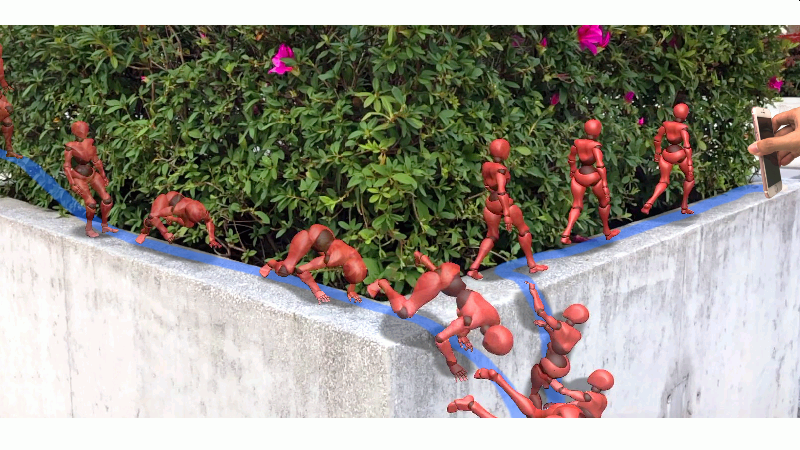

ARAnimator: In-situ Character Animation in Mobile AR with User-defined Motion Gestures

(SIGGRAPH 2020)

Hui Ye, Kin Chung Kwan (Joint first author), Wanchao Su, and Hongbo Fu

Abstract

Creating animated virtual AR characters closely interacting with real environments is interesting but difficult. Existing systems adopt video see-through approaches to indirectly control a virtual character in mobile AR, making close interaction with real environments not intuitive. In this work, we use an AR-enabled mobile device to directly control the position and motion of a virtual character situated in a real environment. We conduct two guessability studies to elicit user-defined motions of a virtual character interacting with real environments, and a set of user-defined motion gestures describing specific character motions. We found that an SVM-based learning approach achieves reasonably high accuracy for gesture classification from the motion data of a mobile device. We present ARAnimator, which allows novice and casual animation users to directly represent a virtual character by an AR-enabled mobile phone and control its animation in AR scenes using motion gestures of the device, followed by animation preview and interactive editing through a video see-through interface. Our experimental results show that with ARAnimator, users are able to easily create in-situ character animations closely interacting with different real environments.

|

|

Mobi3DSketch: 3D Sketching in Mobile AR

(CHI 2019)

Kin Chung Kwan and Hongbo Fu

Abstract

Mid-air 3D sketching has been mainly explored in Virtual Reality (VR) and typically requires special hardware for motion capture and immersive, stereoscopic displays. Recently developed motion tracking algorithms allow real-time tracking of mobile devices, and have enabled a few mobile applications for 3D sketching in Augmented Reality (AR). However, these applications are suitable only for making simple drawings, since they do not consider the special challenges of mobile AR 3D sketching, including the lack of stereo display, the narrow field of view, and the coupling of 2D input, 3D input, and display. To address these issues, we present Mobi3DSketch, which integrates multiple sources of input with tools, mainly different versions of 3D snapping and planar/curved surface proxies. Our multimodal interface supports both absolute and relative drawing, allowing easy creation of 3D concept designs in situ. The effectiveness and expressiveness of Mobi3DSketch are demonstrated via a pilot study.

|

|

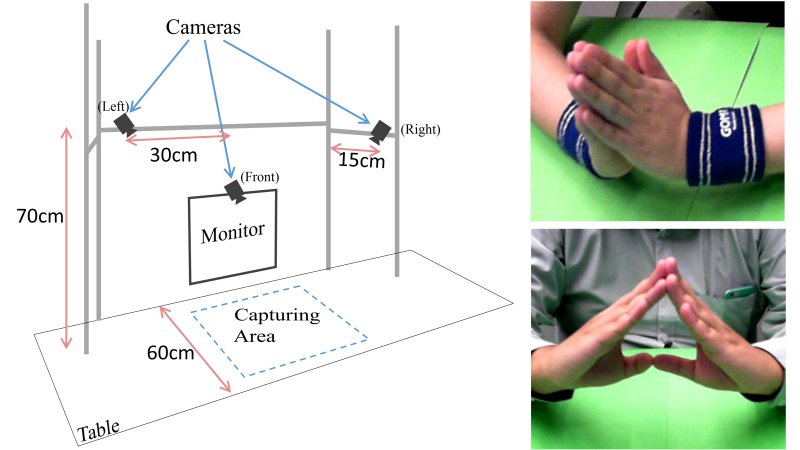

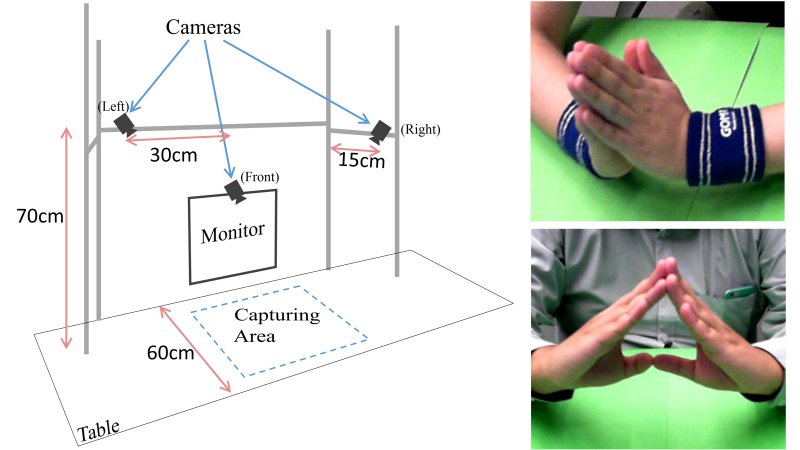

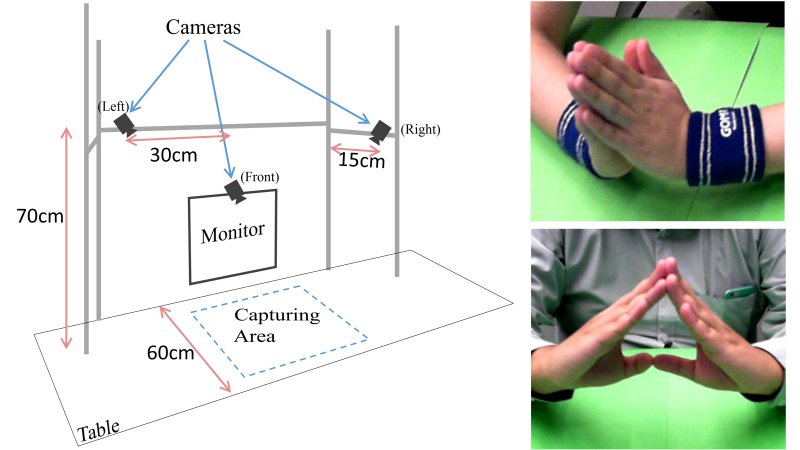

Occlusion-robust Bimanual Gesture Recognition by Fusing Multi-views

(Multimedia Tools and Applications 2019)

Geoffrey Poon, Kin Chung Kwan, and Wai-Man Pang

Abstract

Human hands are dexterous and have always been an intuitive way to instruct or communicate with peers. In recent years, hand gestures have also been widely used as a novel means of human-computer interaction. However, existing approaches target only single-handed gestures, not gestures with two hands in close proximity (bimanual gestures). This paper therefore tackles the problem of bimanual gesture recognition, which is not well studied in the literature. To overcome the critical issue of hand-hand self-occlusion in bimanual gestures, multiple cameras at different viewpoints are used. A tailored multi-camera system is constructed to acquire multi-view bimanual gesture data. Classifiers are trained on our bimanual gesture dataset using both shape and color features. A weighted sum fusion scheme combines the results predicted by the different classifiers, with the fusion weights optimized according to how well recognition performs on each particular view. Our experiments show that multiple-view results outperform single-view results. The proposed method is especially suitable for interactive multimedia applications, such as our two demo programs: a video game and a sign language learner.
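As a rough illustration of weighted sum fusion across camera views, the sketch below combines per-view class probabilities using one weight per view. The function name `fuse_views`, the example probabilities, and the use of per-view validation accuracy as weights are assumptions made for illustration; the abstract does not specify how the paper optimizes its weights.

```python
def fuse_views(view_probs, view_weights):
    """Weighted-sum fusion of per-view class probabilities.

    view_probs:   one probability list per camera view (hypothetical data).
    view_weights: one non-negative weight per view, e.g. how well that
                  view's classifier performed on held-out data (assumed).
    Returns (index of the winning class, fused scores).
    """
    total = sum(view_weights)
    n_classes = len(view_probs[0])
    fused = [0.0] * n_classes
    for probs, weight in zip(view_probs, view_weights):
        for c, p in enumerate(probs):
            # Normalize weights so the fused scores still sum to 1.
            fused[c] += (weight / total) * p
    best = max(range(n_classes), key=lambda c: fused[c])
    return best, fused


# Three views voting on two gesture classes; the first view is partly
# occluded, so it gets the lowest weight.
probs = [[0.6, 0.4], [0.2, 0.8], [0.3, 0.7]]
weights = [0.5, 0.9, 0.8]
best, fused = fuse_views(probs, weights)
```

Here the occluded view alone would pick class 0, but the two better-weighted views outvote it.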

|

|

Real-time Multi-view Bimanual Gesture Recognition

(IEEE ICSIP 2018)

Geoffrey Poon, Kin Chung Kwan, and Wai-Man Pang

Abstract

This paper presents a learning-based solution for real-time recognition of bimanual (two-handed) gestures, which is not well studied in the literature. To overcome the critical issue of hand-hand self-occlusion common in bimanual gestures, multiple cameras at diverse viewpoints are used. A tailored multi-camera system is constructed to acquire multi-view bimanual gesture data, and data from each view is then fed into a separate classifier for learning. To combine the results from these classifiers, we propose a weighted sum fusion scheme. The weights are optimized according to how well recognition performs on each particular view. Our experiments show that multiple-view results outperform single-view results.

|

|

Packing Vertex Data into Hardware-decompressible Textures

(TVCG 2017)

Kin Chung Kwan, Xuemiao Xu, Liang Wan, Tien-Tsin Wong, and Wai-Man Pang

Abstract

Most graphics hardware features memory to store textures and vertex data for rendering. However, because of the irreversible trend of increasing scene complexity, rendering a scene can easily reach the limit of memory resources. Thus, vertex data are preferably compressed, with a requirement that they can be decompressed during rendering. In this paper, we present a novel method to exploit existing hardware texture compression circuits to facilitate the decompression of vertex data in graphics processing units (GPUs). This built-in hardware allows real-time, random-order decoding of data. However, vertex data must be packed into textures, and careless packing arrangements can easily disrupt data coherence. Hence, we propose an optimization approach for the best vertex data permutation that minimizes compression error. Together, these result in fast and high-quality vertex data decompression for real-time rendering. To further improve the visual quality, we introduce vertex clustering to reduce the dynamic range of data during quantization. Our experiments demonstrate the effectiveness of our method for various vertex data of 3D models during rendering, with the advantages of a minimized memory footprint and high frame rate.
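The benefit of reducing dynamic range before quantization can be seen in a minimal sketch. It uses plain uniform quantization on a 1D coordinate list, which is an assumption for illustration only: the paper targets hardware texture compression, and the helper names, the 4-bit setting, and the hand-picked clusters below are all hypothetical.

```python
def quantize(values, lo, hi, bits):
    """Map values in [lo, hi] to integer codes with the given bit depth."""
    levels = (1 << bits) - 1
    span = (hi - lo) or 1.0  # avoid division by zero for constant groups
    return [round((v - lo) / span * levels) for v in values]


def dequantize(codes, lo, hi, bits):
    """Map integer codes back to approximate values in [lo, hi]."""
    levels = (1 << bits) - 1
    return [lo + c / levels * (hi - lo) for c in codes]


def max_error(values, groups, bits):
    """Worst reconstruction error when each group uses its own range."""
    worst = 0.0
    for group in groups:
        chunk = [values[i] for i in group]
        lo, hi = min(chunk), max(chunk)
        restored = dequantize(quantize(chunk, lo, hi, bits), lo, hi, bits)
        worst = max(worst, max(abs(a - b) for a, b in zip(chunk, restored)))
    return worst


# Two spatially separate clumps of vertex coordinates (made-up data).
coords = [0.0, 0.13, 0.27, 0.4, 100.0, 100.11, 100.29, 100.4]
global_err = max_error(coords, [list(range(len(coords)))], bits=4)
clustered_err = max_error(coords, [[0, 1, 2, 3], [4, 5, 6, 7]], bits=4)
```

With one global range, the 16 available levels are spread over the whole extent, so nearby vertices collapse onto the same code. Giving each cluster its own range spends the same number of bits on a much smaller interval, at the cost of storing a `lo`/`hi` pair per cluster.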

|

|

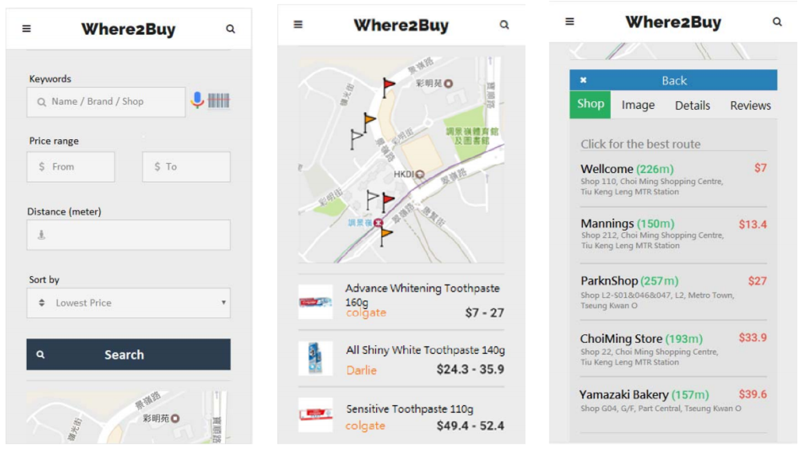

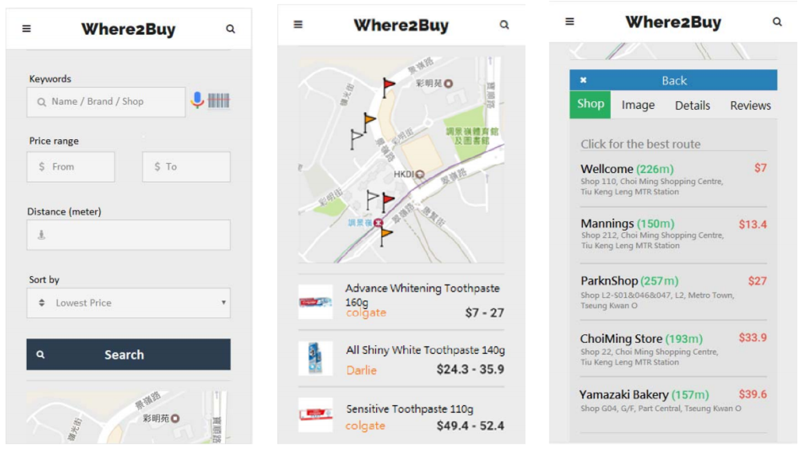

Where2Buy: A Location-Based Shopping App with Products-wise Searching

(IEEE Workshop on Interactive Multimedia Application and Design for Quality Living 2017)

Kin Chi Chan, Tak Leung Cheung, Siu Hong Lai, Kin Chung Kwan, Hoyin Yue and Wai-Man Pang

Abstract

Consumers usually search for a product by category and go to the corresponding kind of shop to buy it, e.g., food in a supermarket or a pencil from a stationery shop. Nowadays, however, it is not uncommon for a shop to sell several categories of goods at once: a newspaper stand may sell toys, and an accessory shop may carry stationery. Consumers may not easily notice and purchase these goods, especially if they are in a hurry or unfamiliar with the shops nearby. With the emergence and popularity of shopping search engines (shopbots), we can provide a better match between consumers and sellers. In this paper, we develop a shopbot app system (Where2Buy) for smartphones that can search and filter nearby shops that sell the desired products. To simplify the input process, our system allows users to search by text or voice, and fuzzy matching is supported to widen the scope of the search. Detailed information on related products and shops is displayed in the results, together with a navigation map showing the best route to the target shops. If the desired product is not available nearby, substitutes in the same category are recommended to the user. Our user study shows that our system is simple and easy to use. More than half of the participants preferred Where2Buy to the other available shopbots in Hong Kong.
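To give a feel for how fuzzy matching widens a product search, here is a minimal sketch using Python's standard `difflib` module. The catalog contents, the function name `find_shops`, and the similarity cutoff are assumptions for illustration; the abstract does not say which matching technique Where2Buy uses.

```python
import difflib


def find_shops(query, catalog, cutoff=0.6):
    """Return shops for product names that approximately match the query.

    catalog maps a product name to the shops that sell it (made-up data).
    cutoff is the minimum similarity ratio (0..1) for a name to count.
    """
    names = list(catalog)
    matches = difflib.get_close_matches(query.lower(), names, n=3, cutoff=cutoff)
    return {name: catalog[name] for name in matches}


catalog = {
    "pencil": ["Stationery Shop A", "Accessory Shop B"],
    "newspaper": ["Newspaper Stand C"],
    "toy car": ["Newspaper Stand C", "Toy Shop D"],
}

# A misspelled query still reaches the intended product.
result = find_shops("pencle", catalog)
```

A lower `cutoff` tolerates more typos or voice-recognition errors but also returns more unrelated products, so the value would need tuning against real queries.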

|

|

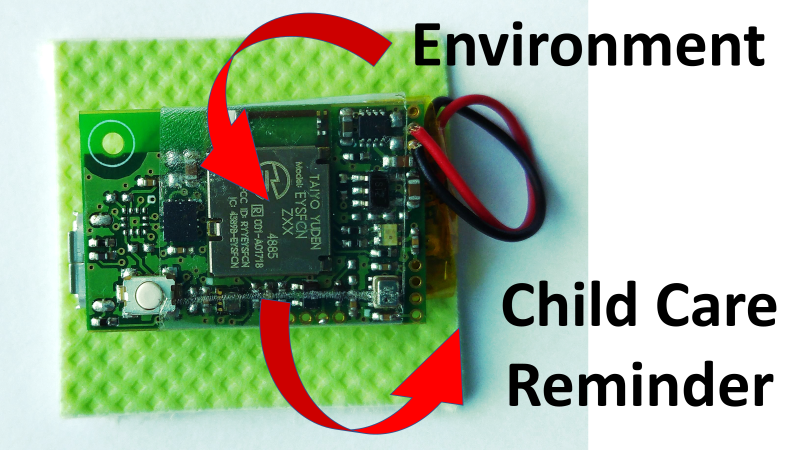

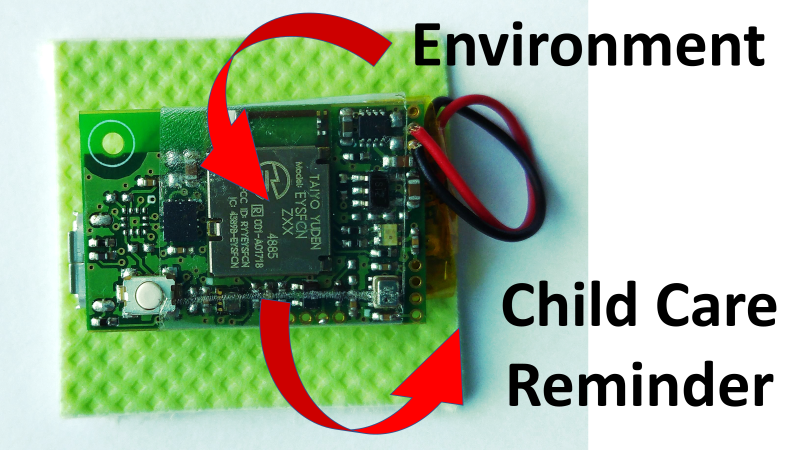

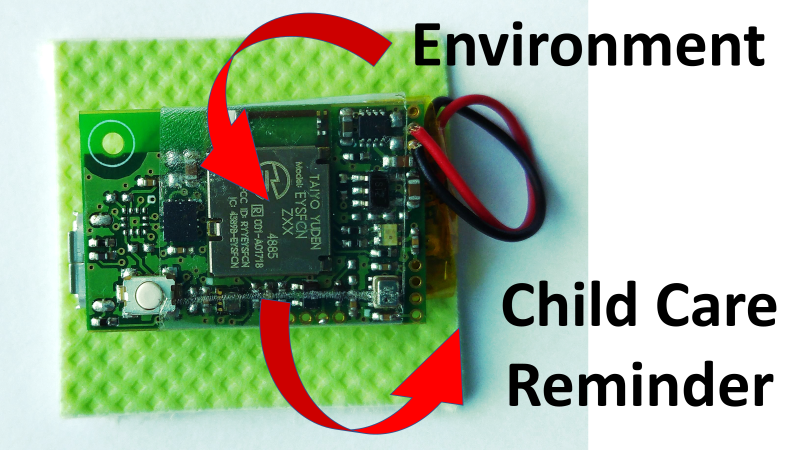

Towards Using Tiny Sensors with Heat Balancing Criteria for Child Care Reminders

(International Journal of Semantic Computing 2016)

Geoffrey Poon, Kin Chung Kwan, Wai-Man Pang and Kup-Sze Choi

Abstract

Raising children is challenging and requires lots of care. Parents always have to provide proper care to their children in time, such as hydration and clothing. However, it is difficult to always stay alert or be aware of the care required at the proper moments. One reason is that parents nowadays are busy. This especially applies to single parents or those who need to raise multiple children. This paper presents the use of an integrated multi-sensor unit together with a mobile application to help keep track of unusual situations concerning a child. By monitoring the changes in surrounding temperature, motion, and air pressure acquired from the sensors, our mobile application can infer the physiological needs of the child under the heat equilibrium assumption. As the thermal environment in the human body is mainly governed by the heat balance equation, we fuse all available sensor readings into the equation so as to estimate the change in the child's situation over a certain period of time. Our system can then notify the parents of the necessary care, including hydration, dining, clothing, and ear barotrauma relief. The proposed application can relieve some of the mental load and pressure on parents in taking care of children.

|

|

Towards Using Tiny Multi-sensors Unit for Child Care Reminders

(IEEE BigMM 2016)

Geoffrey Poon, Kin Chung Kwan, Wai-Man Pang, and Kup-Sze Choi

Abstract

Raising children is challenging and requires lots of care. Parents always have to keep track of the status of their children and provide proper care to them in time, such as hydration, dining, clothing, and discomfort relief. However, it is difficult to always stay alert or be aware of the care the children require at the proper moments. One reason is that parents nowadays are busy, as they usually have to work outside the home and may lack skills in looking after their children. This especially applies when only one of the parents can take care of the child, or when one needs to raise several children at the same time. This paper presents the use of an integrated multi-sensor unit together with a mobile application to help keep track of unusual situations of a child. By monitoring the changes in the surrounding temperature, motion, and air pressure acquired from the sensors, our mobile application can detect the status of the child and notify the parents of the necessary care. The care includes hydration, dining, clothing, and ear barotrauma relief. The proposed mobile application can relieve some of the mental load and pressure on parents in taking care of children.

|

|

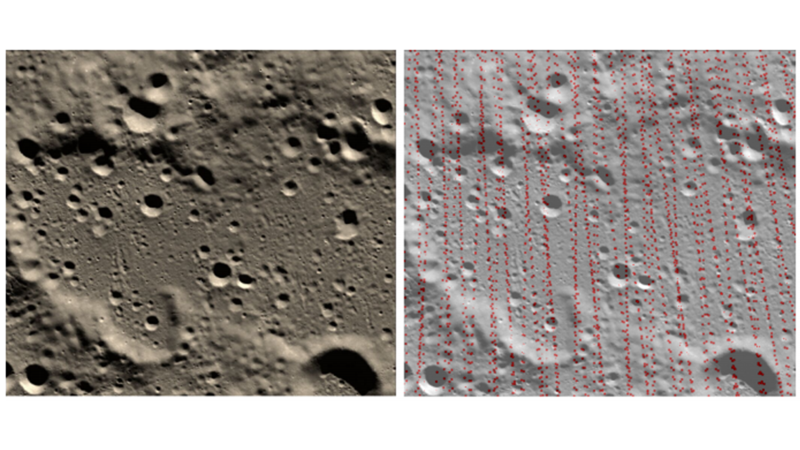

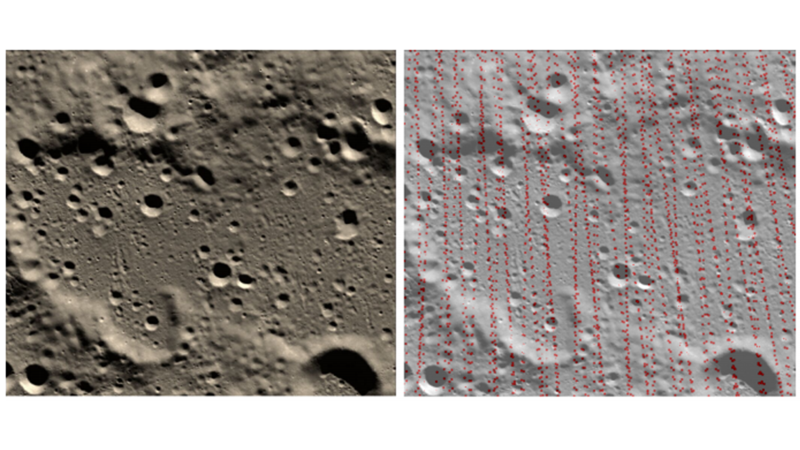

A Two-Phase Space Resection Model for Accurate Topographic Reconstruction from Lunar Imagery with Pushbroom Scanners

(Sensors 2016)

Xuemiao Xu, Huaidong Zhang, Guoqiang Han, Kin Chung Kwan, Wai-Man Pang, Jiaming Fang, and Gansen Zhao

Abstract

Exterior orientation parameter (EOP) estimation using space resection plays an important role in topographic reconstruction for pushbroom scanners. However, existing models of space resection are highly sensitive to errors in data. Unfortunately, for lunar imagery, the altitude data at the ground control points (GCPs) for space resection are error-prone. Thus, existing models fail to produce reliable EOPs. Motivated by the finding that, for pushbroom scanners, the angular rotations of EOPs can be estimated independently of the altitude data, using only the geographic data at the GCPs, which are already provided, we divide the modeling of space resection into two phases. First, we estimate the angular rotations based on the reliable geographic data using our proposed mathematical model. Then, with the accurate angular rotations, the collinear equations for space resection are simplified into a linear problem, and the global optimal solution for the spatial position of EOPs can always be achieved. Moreover, a certainty term is integrated to penalize the unreliable altitude data and so increase the error tolerance. Experimental results show that, compared to the existing space resection model, our model obtains more accurate EOPs and topographic maps not only for simulated data but also for real data from Chang'E-1.

|

|

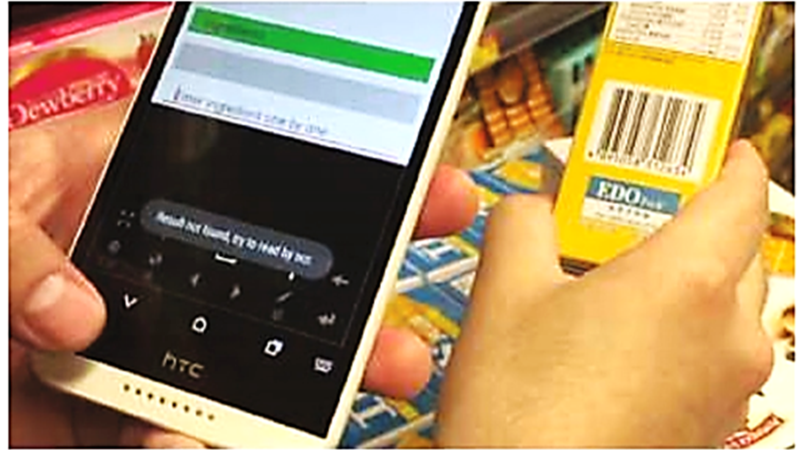

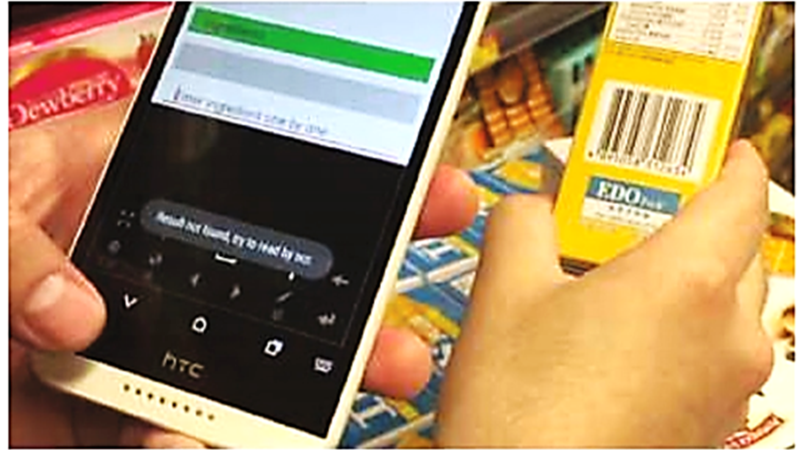

A Mobile Adviser of Healthy Eating by Reading Ingredient Labels

(MOBIHEALTH 2016)

Man Wai Wong, Qing Ye, Yuk Kai Chan Kylar, Wai-Man Pang, and Kin Chung Kwan

Abstract

Understanding the ingredients and additives in food is essential for a healthy life. The general public should be encouraged to learn more about the effects of the food they consume, especially people with allergies or other health problems. However, reading the ingredient label of every packaged food is tedious, and few people spend time on it. This paper proposes a mobile app that eases this burden while providing health advice on packaged food. To facilitate acquisition of the ingredient list, apart from barcode scanning, we recognize the text on ingredient labels directly. Our application can thus alert users to allergens found in food. It also advises users to avoid food that is harmful to health in the long term, such as food high in fat or calories. A preliminary user study reveals that the adviser app is useful and welcomed by many users who care about their diet.

|

|

Pyramid of Arclength Descriptor for Generating Collage of Shapes

(SIGGRAPH Asia 2016)

Kin Chung Kwan, Lok-Tsun Sinn Jimmy, Chu Han, Tien-Tsin Wong, and Chi-Wing Fu

Abstract

This paper tackles a challenging 2D collage generation problem, focusing on shapes: we aim to fill a given region by packing irregular and reasonably sized shapes with minimized gaps and overlaps. To solve this nontrivial problem, we first have to analyze the boundaries of individual shapes and then couple shapes with partially matched boundaries to reduce gaps and overlaps in the collages. Second, the search space for identifying a good coupling of shapes is enormous, since arranging a shape in a collage involves a position, an orientation, and a scale factor. Yet, this matching step needs to be performed for every single shape when we pack it into a collage. Existing shape descriptors are simply infeasible to compute in a reasonable amount of time. To overcome this, we present a brand-new, scale- and rotation-invariant 2D shape descriptor, namely the pyramid of arclength descriptor (PAD). Its formulation is locally supported, scalable, and yet simple to construct and compute. These properties make PAD efficient for performing partial-shape matching. Hence, we can prune away most of the search space with simple calculations and efficiently identify candidate shapes. We evaluate our method using a large variety of shapes with different types and contours. Convincing collage results in terms of visual quality and time performance are obtained.

|