| Week 1 |

|---|

|

[08.28.15] - Friday - First day!

|

|

- Some of the important topics we covered. -

|

|

Sections covered: 1.1 (started)

|

Reading for next time: Section 1.1. Focus on the following:

- the definitions, which are in bold,

- Examples 3, 4, and 5, and

- the geometric interpretation of linear systems with 2 or 3 unknowns (see Figures 1.1 and 1.2).

|

To discuss next time:

- What are the differences between the linear systems in Examples 3, 4, and 5 in terms of the set of solutions? Are there any similarities?

- Prove (or at least be prepared to discuss where you got stuck in proving) the following proposition:

Proposition. Let $a,b,c,d$ be arbitrary real numbers. If $ad-bc \neq 0$, then the homogeneous system

\[

\begin{array}{rcrcr}

ax & + &by & = & 0\\

cx & + & dy & = & 0

\end{array}

\]

has only the trivial solution.
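If you want to experiment before attempting the proof, here is a small Python sketch. It is a sanity check, not a proof. It assumes integer coefficients in a small range, and the helper name `solutions_on_grid` is made up for illustration. It searches a grid of integer pairs for solutions of the system and confirms that only $(0,0)$ shows up whenever $ad-bc\neq 0$.

```python
from itertools import product

def solutions_on_grid(a, b, c, d, bound=3):
    """Return all integer pairs (x, y) with |x|, |y| <= bound that solve
    ax + by = 0 and cx + dy = 0. Hypothetical helper for experimenting."""
    grid = range(-bound, bound + 1)
    return [(x, y) for x, y in product(grid, repeat=2)
            if a * x + b * y == 0 and c * x + d * y == 0]

# Try every integer coefficient choice in [-2, 2] with ad - bc != 0.
coeffs = range(-2, 3)
only_trivial = all(
    solutions_on_grid(a, b, c, d) == [(0, 0)]
    for a, b, c, d in product(coeffs, repeat=4)
    if a * d - b * c != 0
)
print(only_trivial)

# When ad - bc = 0, nontrivial solutions can appear: x + y = 0, 2x + 2y = 0.
print(solutions_on_grid(1, 1, 2, 2))
```

A grid search like this can only suggest that the proposition is true; the proof still has to handle arbitrary real numbers.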

|

| Week 2 |

|---|

|

[08.31.15] - Monday

Started with a great presentation by Eagle and many excellent comments from the audience! We then talked a bit about the differences between presenting proofs at the board and writing up proofs on paper. Here is a link to the written model that I presented today: Proof Model 1.

|

|

Sections covered: 1.1 (finished), 1.2 (started)

|

Reading for next time: Section 1.2. Focus on the following:

- the definitions, paying special attention to $\mathbb{R}^n$, linear combination, and transpose

- notation for matrices, e.g. $A = [a_{ij}]$

- summation notation

|

To discuss next time:

- When we write "let $A=[a_{ij}]$ be an $m\times n$ matrix," what is the difference between $A$ and $a_{ij}$?

- True or False: every vector in $\mathbb{R}^3$ can be written as a linear combination of the following three vectors: \[\begin{bmatrix}1\\0\\0\end{bmatrix}, \begin{bmatrix}0\\1\\0\end{bmatrix}, \begin{bmatrix}0\\0\\1\end{bmatrix}\]

|

[09.02.15] - Wednesday

Albatros presented an excellent solution to the discussion question about writing vectors in $\mathbb{R}^3$ as linear combinations of others. It was very clear and highlighted all of the important steps. Fantastic! We followed it up by digging further into questions about linear combinations, which will be a key concept for this course.

Also, another proof model was added to ShareLaTeX. It is here: Proof Model 2.

|

|

Sections covered: 1.2 (finished), 1.3 (started)

|

Reading for next time: Section 1.3. Focus on the following:

- the definitions, paying special attention to the definition of matrix multiplication (Def. 1.7)

- the discussion about a matrix-vector product (between examples 11 and 12)

- Examples 11, 12, and 13

|

To discuss next time:

- True or False: if $A$ and $B$ are $3\times 3$ matrices, then $AB=BA$.

- True or False: if $A$ and $B$ are $2\times 2$ matrices and $AB = 0_2$, then either $A = 0_2$ or $B = 0_2$. Note: here $0_2$ denotes the $2\times 2$ zero matrix, i.e. \[0_2 = \begin{bmatrix}0 & 0\\0 & 0\end{bmatrix}.\]
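One way to test True/False claims like these is to try specific matrices. The sketch below is only an experiment: the matrices `A` and `B` are chosen for illustration (they are not from the text), and `matmul` is a hand-rolled helper implementing the row-by-column definition of the product.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

# Illustrative 2x2 matrices, neither of which is the zero matrix.
A = [[0, 1],
     [0, 0]]
B = [[1, 0],
     [0, 0]]

AB = matmul(A, B)
BA = matmul(B, A)
print(AB)  # compare with the zero matrix
print(BA)  # compare with AB
```

Think about how you could build $3\times 3$ examples out of these.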

|

|

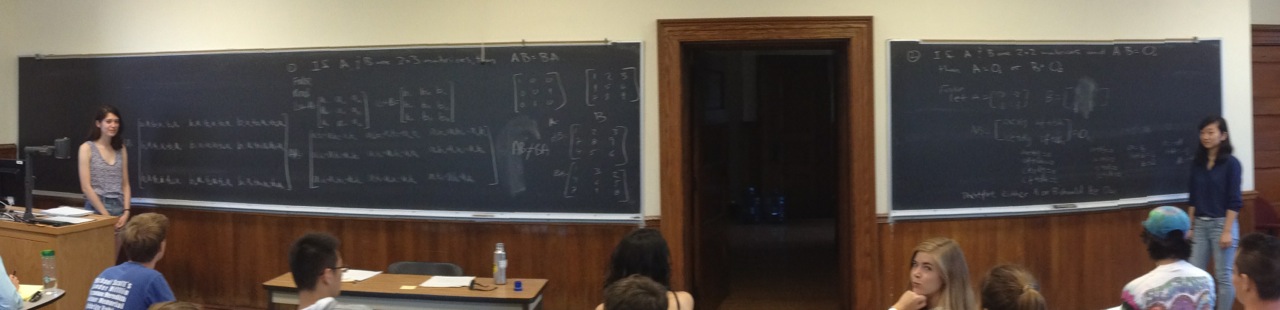

[09.04.15] - Friday

Two fantastic presentations today from Koala and Fox - great job! And great comments from the audience too!

- Koala and Fox -

|

|

Sections covered: 1.3 (finished)

|

Reading for next time: Section 1.4. Focus on the following:

- the theorems, which tell us that the matrix operations satisfy some familiar properties, e.g. commutativity of addition and associativity of addition and multiplication

- Examples 9 and 10 (and the blue boxes surrounding them), which tell us that some familiar properties are not true (in general)

|

To discuss next time:

- Bring in your typed up solution to #28(b) from 1.3; make sure that it is a formal write-up with all variables properly defined, correct punctuation, etc. Be prepared to talk through it on the document camera.

- Bring in your typed up solution to #43(a) from 1.3. Again, make sure that it is a formal write-up.

|

| Week 3 |

|---|

|

[09.07.15] - Monday

Started off with a nice discussion about the structure and goals for a formal proof (as opposed to a presentation of a proof). Many thanks to the two groups that presented their write-ups; they were excellent!!

|

|

Sections covered: 1.4

|

Reading for next time: Section 1.5 up through Example 12. You can skip the subsection on "Partitioned Matrices," though this idea can be really useful sometimes. Focus on the following:

- the definitions, paying special attention to invertible (nonsingular) and inverse

- Examples 11 and 12

|

To discuss next time:

- Give an example of a $2\times 2$ matrix that is not the zero matrix and is not invertible.

- If $A$ is an $n\times n$ diagonal matrix such that every entry on the main diagonal is nonzero, show that $A$ is invertible. Hint: to do this, you just have to write down what the inverse is and then check that it works. If you have trouble seeing it, try experimenting in the $2\times 2$ world first.
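If you want to test a guess for the inverse of a diagonal matrix, a quick experiment along these lines may help. It assumes one natural guess (take reciprocals of the diagonal entries) and one particular $3\times 3$ example; the helpers `diag` and `matmul` are written just for this sketch.

```python
from fractions import Fraction

def diag(entries):
    """Build a diagonal matrix (list of rows) from its diagonal entries."""
    n = len(entries)
    return [[entries[i] if i == j else Fraction(0) for j in range(n)]
            for i in range(n)]

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

entries = [Fraction(2), Fraction(-3), Fraction(5)]  # arbitrary nonzero entries
A = diag(entries)
B = diag([1 / d for d in entries])  # the guess: reciprocals on the diagonal
I = diag([Fraction(1)] * 3)

print(matmul(A, B) == I and matmul(B, A) == I)
```

One example is not a proof, of course, but it tells you whether the guess is worth pursuing for general $n$.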

|

|

[09.09.15] - Wednesday

Finished up 1.4, and started talking about inverses. Two (or three, depending on how you count) great presentations today. Both were clear and very well laid out. There was a bit of confusion about the hypotheses for one of the questions, but in the end, it couldn't have worked out better!

|

|

Sections covered: 1.4 (finished), 1.5 (started)

|

Reading for next time: Section 1.5 from Theorem 1.6 through Example 14. Focus on the following:

- Theorem 1.6

- Example 14

|

To discuss next time:

- Take another crack at this one: if $A$ is an $n\times n$ diagonal matrix such that every entry on the main diagonal is nonzero, show that $A$ is invertible. Hint: take an educated guess at what the inverse is (based on class today), and call it $B$. To show that $B$ is indeed the inverse, you just need to show that $AB = I$ and $BA = I$ (the remark between Definition 1.10 and Example 10 simplifies this further).

- Let $A = \begin{bmatrix} 2 & 3\\ 5 & 7\end{bmatrix}$. First, show that $B = \begin{bmatrix} -7 & 3\\ 5 & -2\end{bmatrix}$ is the inverse of $A$. Hint: you just need to show that $AB = I$. Second, use that $B = A^{-1}$ to quickly solve $\begin{bmatrix} 2 & 3\\ 5 & 7\end{bmatrix} \begin{bmatrix} x_1 \\ x_2\end{bmatrix} = \begin{bmatrix} 1\\ 1\end{bmatrix}$ (as in Example 14).
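To check your hand computation for the second question, here is a short Python sketch. The helper `matmul` is written for this example; the matrices are the ones given above. It verifies $AB = I$ and then computes $\mathbf{x} = B\mathbf{b}$ for $\mathbf{b} = \begin{bmatrix}1\\1\end{bmatrix}$.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

A = [[2, 3],
     [5, 7]]
B = [[-7, 3],
     [5, -2]]
I2 = [[1, 0],
      [0, 1]]

print(matmul(A, B) == I2)   # is B the inverse of A?

b = [[1], [1]]              # right-hand side as a column vector
x = matmul(B, b)            # x = A^{-1} b
print(x)
print(matmul(A, x) == b)    # does x really solve Ax = b?
```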

|

|

[09.11.15] - Friday

Had several nice presentations today that were followed by a lively discussion. Nice job!

|

|

Sections covered: 1.5

|

Reading for next time: Section 1.6. Focus on the following:

- the introduction

- Examples 1 and 2 and the discussion in between

|

To discuss next time:

- Bring in your typed up solution to #50 from 1.5; make sure that it is a formal write-up that is fully justified with correct punctuation. Be prepared to talk through it on the document camera.

- Bring in your typed up solution to AP #2. Again, make sure that it is a formal write-up.

|

| Week 4 |

|---|

|

[09.14.15] - Monday

Talked through two very nice proof write-ups at the start of class today. We had good conversation about the exposition as well as the math. Excellent!

|

|

Sections covered: 1.6 (started)

|

Reading for next time: Section 1.6. Focus on the following:

- Example 3

|

To discuss next time:

- Let $T:\mathbb{R}^2 \rightarrow \mathbb{R}^3$ be the transformation defined by \[T(\bar{v}) = \begin{bmatrix}1 & 3\\ 0 & 1\\ 2 & 2\end{bmatrix}\bar{v}.\]

- Is $\begin{bmatrix}-1\\ -3\\ 4\end{bmatrix}$ in the range of $T$? Is $\begin{bmatrix}2\\ 1\\ 0\end{bmatrix}$ in the range of $T$?

- Can you finish the following sentence? "The vector $\begin{bmatrix}a\\ b\\ c\end{bmatrix}$ is in the range of $T$ if and only if... (some equation involving $a$, $b$, and $c$)"
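A small sketch for checking your answers about the range of $T$. It reads the three component equations off the matrix in the definition of $T$, namely $T(x,y) = (x+3y,\ y,\ 2x+2y)$; the function name `preimage` is made up for illustration.

```python
def preimage(a, b, c):
    """Return (x, y) with T(x, y) = (a, b, c), or None if no such pair exists.

    Uses T(x, y) = (x + 3y, y, 2x + 2y).
    """
    y = b              # the second component forces y = b
    x = a - 3 * b      # the first component then forces x = a - 3y
    # The third component must agree, otherwise (a, b, c) is not in the range.
    return (x, y) if 2 * x + 2 * y == c else None

print(preimage(-1, -3, 4))
print(preimage(2, 1, 0))
```

The last line of the function is exactly where the "if and only if" condition on $a$, $b$, and $c$ is hiding.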

|

|

[09.16.15] - Wednesday

Great presentations today, but they would have been so much better if I hadn't talked so much...sorry! Many thanks to the presenters. But, audience members, ask more questions and make more comments! You were so quiet today.

|

|

Sections covered: 1.6 (finished), Logic (started)

|

Reading for next time:

- Read the first paragraph of this: https://math.berkeley.edu/~hutching/teach/proofs.pdf

- Hopefully the previous reading made you think, "what's Gödel's Theorem?" So now read this: http://www.scientificamerican.com/article/what-is-godels-theorem/. (And, if you want, check out the Wikipedia article on Gödel's Incompleteness Theorems.)

|

To discuss next time:

- What is a proof?

- What is Gödel's Theorem saying, and does it bother you?

|

|

[09.18.15] - Friday

Started with a nice discussion about what is a proof. It definitely seems fuzzy...

|

|

Sections covered: Logic (continued)

|

|

Reading for next time: none.

|

To discuss next time:

- Bring in your typed up solution to #21 from Section 1.6; make sure that it is a formal write-up that is fully justified with correct punctuation. Be prepared to talk through it on the document camera.

- Construct a truth table to prove the "Law of Implication," which states that $p\implies q \equiv (\sim p)\vee q$.
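For comparison with your handwritten truth table, here is a sketch that generates the four rows in Python. It takes the standard truth table for $p\implies q$ as the definition of implication (stored in the dictionary `IMPLIES`, a name chosen for this example) and compares it with $(\sim p)\vee q$ row by row.

```python
from itertools import product

# Truth table for implication, taken here as the definition of p => q:
# it is false only when p is true and q is false.
IMPLIES = {(True, True): True, (True, False): False,
           (False, True): True, (False, False): True}

rows = []
for p, q in product([True, False], repeat=2):
    lhs = IMPLIES[(p, q)]   # p => q
    rhs = (not p) or q      # (~p) v q
    rows.append((p, q, lhs, rhs))
    print(p, q, lhs, rhs)

# The two statements are logically equivalent if the columns agree in every row.
equivalent = all(lhs == rhs for _, _, lhs, rhs in rows)
print(equivalent)
```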

|

| Week 5 |

|---|

|

[09.21.15] - Monday

Great presentations today. One proof write-up, which was so clean that we struggled to make comments, though a couple people saw something (great!), and one proof of a logical equivalence that was by the book. Nice job!

|

|

Sections covered: Logic

|

|

Reading for next time: none.

|

To discuss next time:

- Consider the following statement where $I$ denotes the $n\times n$ identity matrix.

"For every $n\times n$ matrix $A$, if there is a $c\in \mathbb{R}$ such that $A=cI$, then $AB = BA$ for every $n\times n$ matrix $B$."

Try to write the negation of the statement as clearly as possible in English. (You do not need to determine if the statement is true or false...but you can if you want.)

|

|

[09.23.15] - Wednesday

|

|

Sections covered: Logic

|

Reading for next time:

- Start by reading Theorem 2.3 on page 96. It contains terms that we haven't defined yet, but it motivates Section 2.1.

- Look back, if needed, to remember how we change between linear systems and augmented matrices (see pg 27-28).

- Read Definitions 2.2 and 2.3 in Section 2.1, and then read Examples 3 and 4 in Section 2.1.

|

To discuss next time:

- Do you see similarities between the manipulations we do to solve linear systems (see blue box on page 6) and the elementary row operations? Differences?

- Consider the following linear system: \[\begin{align*}x_2 + x_3 &= 2\\x_1 + x_3 &= 1\\-3x_1 + 2x_2 &= 4\end{align*}\]

- Write the augmented matrix corresponding to this linear system.

- Show that the augmented matrix you found is row equivalent to \[ \left[\begin{array}{ccc|c}1 & 0 & 1& 1\\ 0 & 1 & 1& 2\\0 & 0 & 1& 3\end{array}\right].\] That is, use elementary row operations to transform the augmented matrix you found before into this one.

- Write out the linear system corresponding to this new augmented matrix.
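Once you have done the row reduction by hand, you can compare against the following sketch. It shows one possible sequence of elementary row operations (others work too); the helper names `swap` and `add_multiple` are made up for this example, and rows are indexed from 0.

```python
def swap(M, i, j):
    """Elementary row operation: interchange rows i and j."""
    M[i], M[j] = M[j], M[i]

def add_multiple(M, target, source, k):
    """Elementary row operation: replace row `target` by
    row `target` + k * row `source`."""
    M[target] = [t + k * s for t, s in zip(M[target], M[source])]

# Augmented matrix of the system, one row per equation.
M = [[0, 1, 1, 2],
     [1, 0, 1, 1],
     [-3, 2, 0, 4]]

swap(M, 0, 1)              # put a 1 in the top-left corner
add_multiple(M, 2, 0, 3)   # clear the -3 below it
add_multiple(M, 2, 1, -2)  # clear the 2 in the second column of the last row
print(M)
```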

|

|

[09.25.15] - Friday

|

|

Sections covered: Logic, 2.1 (started)

|

Reading for next time: Section 2.2. Focus on the following:

- Examples 3, 6, and 10

|

To discuss next time:

- Notice that, as in Example 6, you can stop the reduction process at an REF and solve the system with "back substitution", or you can continue the reduction process to RREF and have the solutions right there. Which one seems more efficient? Really think about this.

- Suppose you are trying to solve a homogeneous linear system of 3 equations in 4 unknowns. Explain (and don't just quote a theorem) why the system must have infinitely many solutions (regardless of what the system actually looks like).

- Is an arbitrary linear system of 3 equations in 4 unknowns guaranteed to have infinitely many solutions? Why or why not?

|

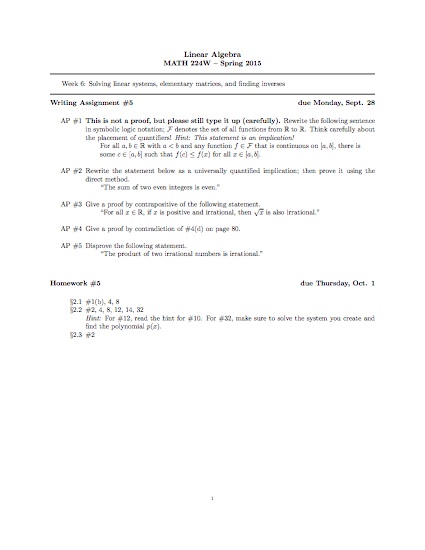

| Week 6 |

|---|

|

[09.28.15] - Monday

|

|

Sections covered: 2.2

|

|

Reading for next time: Start of Section 2.3, through Example 2. Also read Lemma 2.1.

|

To discuss next time: let's make sense of Lemma 2.1. Let $A$ be an $n\times n$ matrix.

- We want to show that if $A\mathbf{x} = \mathbf{0}$ has only the trivial solution, then $A$ is row equivalent to the identity. Suppose $A\mathbf{x} = \mathbf{0}$. Using the language of pivots and free variables, find the RREF of $\left[\begin{array}{c|c}A & 0\end{array}\right]$. What does this mean about the RREF of $A$?

- Is the converse true? That is, is it true that if $A$ is row equivalent to the identity, then $A\mathbf{x} = \mathbf{0}$ has only the trivial solution?

|

|

[09.30.15] - Wednesday Two very nice presentations today, but we really have to get the audience to wake up!

|

|

Sections covered: 2.2 (finished), 2.3 (started)

|

Reading for next time: Section 2.3 - Finding $A^{-1}$. Focus on the following:

- pg. 121

- Examples 5 and 6

|

To discuss next time: Let $A$ be an arbitrary $2\times 2$ upper triangular matrix, i.e. \[A=\begin{bmatrix}a & b\\ 0 & c\end{bmatrix}.\]

- Complete the following sentence: $A$ is invertible if and only if... (insert some condition on $a$, $b$, and $c$)

- What if $A$ had been an arbitrary $n\times n$ upper triangular matrix? Complete the following sentence for the $n\times n$ case: $A$ is invertible if and only if... (insert some condition on the entries of $A$)

- Find an expression (in the $2\times 2$ case) for $A^{-1}$ in terms of $a$, $b$, and $c$, when it exists.
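If you come up with a formula for $A^{-1}$, you can test it on a specific matrix before presenting. The sketch below tests one candidate formula on one example with exact fractions; both the candidate (in the hypothetical function `inverse_upper_2x2`) and the sample values $a=2$, $b=5$, $c=3$ are assumptions made for illustration.

```python
from fractions import Fraction

def inverse_upper_2x2(a, b, c):
    """Candidate inverse of [[a, b], [0, c]], assuming a and c are nonzero."""
    a, b, c = Fraction(a), Fraction(b), Fraction(c)
    return [[1 / a, -b / (a * c)],
            [Fraction(0), 1 / c]]

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

A = [[Fraction(2), Fraction(5)],
     [Fraction(0), Fraction(3)]]
B = inverse_upper_2x2(2, 5, 3)
I2 = [[1, 0],
      [0, 1]]

print(matmul(A, B) == I2, matmul(B, A) == I2)
```

Notice where the formula would break down; that is the condition the first question is asking about.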

|

|

[10.02.15] - Friday Great presentations today about upper triangular matrices and invertibility. This is a very good result to remember.

|

|

Sections covered: 2.3 (finished), 3.1 (started)

|

Reading for next time: Section 3.1. Focus on the following:

- Intro. through Example 5. (For the record, I find Example 1 confusing, which motivated the discussion questions below.)

|

To discuss next time:

- How do you visualize a permutation? If you're at a loss, try googling "permutation."

- How many permutations are there of the set $\{1,2,3,4\}$, i.e. how many ways are there to arrange the numbers $1,2,3,4$? (Yes, the answer is in the book. But, I want each of you to understand it and be able to clearly explain it.)

- Is the permutation 4321 even or odd? Be able to identify the inversions.
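To double-check your counting, here is a short sketch using Python's `itertools.permutations`. An inversion is taken to be a pair of entries where the larger number appears before the smaller one; the function name `inversions` is chosen for this example.

```python
from itertools import permutations

def inversions(perm):
    """List all pairs (larger, smaller) where the larger entry comes first."""
    return [(perm[i], perm[j])
            for i in range(len(perm))
            for j in range(i + 1, len(perm))
            if perm[i] > perm[j]]

# Count the arrangements of 1, 2, 3, 4 by listing them all.
count = len(list(permutations([1, 2, 3, 4])))
print(count)

inv = inversions((4, 3, 2, 1))
parity = "even" if len(inv) % 2 == 0 else "odd"
print(inv)
print(len(inv), parity)
```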

|

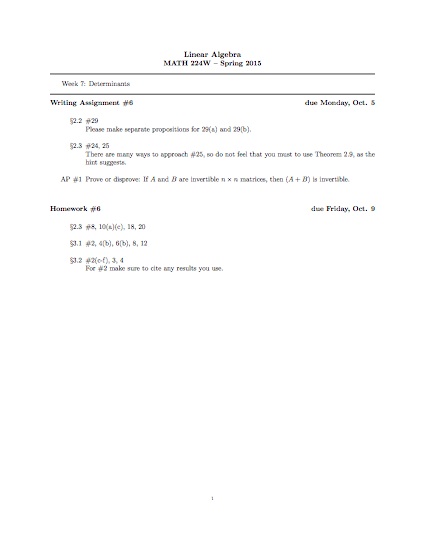

| Week 7 |

|---|

|

[10.05.15] - Monday

|

|

Sections covered: 3.1

|

Reading for next time: Section 3.2. Focus on the following:

- Read the statements of the Theorems, but you can skip the proofs for now.

|

To discuss next time: let's make a review sheet for the midterm.

- Please construct one question of the form "provide a specific example which satisfies the given description, or if no such example exists, briefly explain why not" and add it to our review sheet here

https://www.sharelatex.com/project/5613264e9c807a8a65e40164

The review sheet already contains a question I made that you can use as an example.

Make sure to

- put your initials next to your questions, and

- put an answer in the "Answers" section of the document (again, I provided an example).

- Repeat with a T/F question.

- Make sure to write down your questions and answers on paper too, so you are prepared to present them in class.

|

|

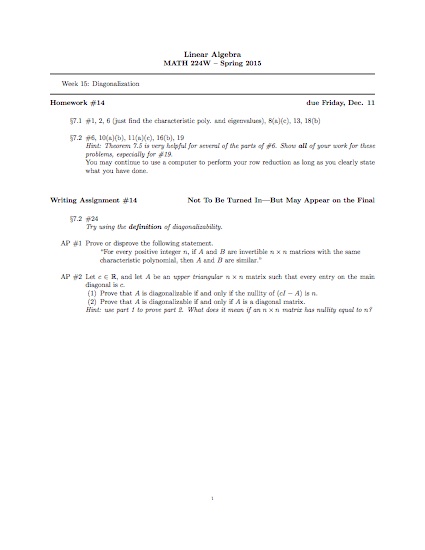

[10.07.15] - Wednesday Nice job creating review questions, and surprisingly, there were very few duplicates. Here's (a very slightly edited version of) what you made:

Exam1ReviewQuestions.pdf

Happy studying!

|

|

Sections covered: 3.2 (started)

|

|

Reading for next time: None. Focus on studying.

|

|

To discuss next time: The exam.

|

|

[10.09.15] - Friday Wrapped up determinants today, for the most part. On to vector spaces!

|

|

Sections covered: 3.2, 3.3 (started)

|

Reading for next time: Section 3.3. Focus on the following:

- Example 2 in Section 3.3.

|

To discuss next time: Properties of the determinant.

- Let $A\in M_{n\times n}$, and assume that $A$ is invertible with $A=A^{-1}$. What are the possible values for $|A|$?

- Let $A\in M_{n\times n}$, and assume that $|A-I_n| = 0$. Explain why there is some nontrivial $v\in \mathbb{R}^n$ such that $Av = v$. Hint: Invertibility Theorem.

|

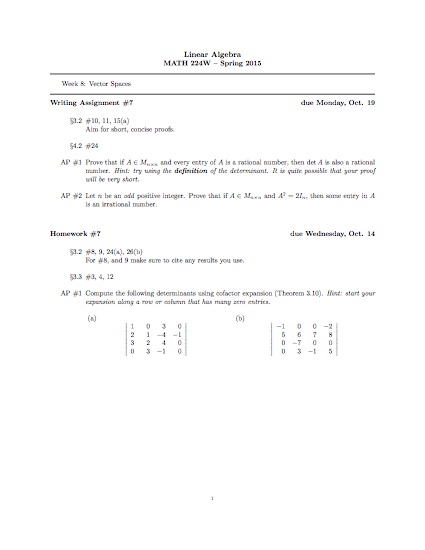

| Week 8 |

|---|

|

[10.12.15] - Monday

|

|

Sections covered: 3.3 (finished), 4.2 (started)

|

Reading for next time: Section 4.2. Focus on the following:

- Examples 5, 6, 11 (and the blue box following Example 11)

- The proof of Theorem 4.2(a)

|

To discuss next time:

- Let $V$ be the set of all $2\times 2$ matrices (with entries from $\mathbb{R}$) that have determinant $0$. Define $\oplus$ to be ordinary matrix addition, and $\odot$ to be ordinary scalar multiplication. Is $V$ closed under addition? Is $V$ closed under scalar multiplication?

- Digest the proof of Theorem 4.2(a). Use a similar idea to prove Theorem 4.2(b).

|

|

[10.14.15] - Wednesday

|

|

Sections covered: 4.2

|

Reading for next time: Section 4.3. Focus on the following:

- Definition 4.5 through Theorem 4.3 (put your focus on Theorem 4.3)

- Example 4

|

To discuss next time:

- Bring in your typed up solution to #24 from 4.2; make sure that it is a formal write-up that is fully justified with correct punctuation. Be prepared to talk through it on the document camera.

- We learned that $M_{2\times 2}$ is a vector space. Let $W$ be the subset of $M_{2\times 2}$ consisting of the $2\times 2$ matrices whose trace is $0$, i.e. $W=\{A \in M_{2\times 2} \,|\, \operatorname{tr}(A) = 0\}$. Explain why $W$ is a subspace of $M_{2\times 2}$.

|

|

[10.16.15] - Friday Fall Break!

|

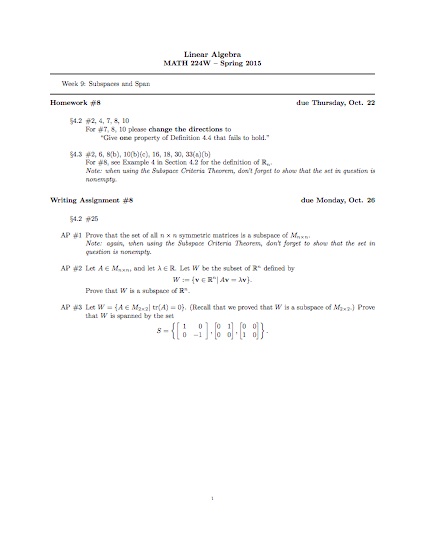

| Week 9 |

|---|

|

[10.19.15] - Monday Excellent discussion around an excellent presentation of a write-up for #24 from 4.2. Nice job!

|

|

Sections covered: 4.2 (finished), 4.3 (started)

|

Reading for next time: Section 4.3. Focus on the following:

- Example 6 and Definition 4.6

|

To discuss next time:

- Carry over from last time... We learned that $M_{2\times 2}$ is a vector space. Let $W$ be the subset of $M_{2\times 2}$ consisting of the $2\times 2$ matrices whose trace is $0$, i.e. $W=\{A \in M_{2\times 2} \,|\, \operatorname{tr}(A) = 0\}$. Explain why $W$ is a subspace of $M_{2\times 2}$.

- Digest Example 6 (and Definition 4.6), and be able to explain the following: if $V$ is a vector space, $v_1,v_2\in V$, and $W$ is the set of all linear combinations of $v_1$ and $v_2$, then $W$ is a subspace of $V$.

|

|

[10.21.15] - Wednesday

|

|

Sections covered: 4.3

|

Reading for next time: Section 4.4. Focus on the following:

- Definition 4.7

- Examples 1 and 2

- Theorem 4.4

|

To discuss next time:

- Be able to write on the board, from memory, definitions of the following:

- a linear combination of vectors $v_1,\ldots,v_k \in V$

- $\operatorname{span}\{v_1,\ldots,v_k\}$

I may call on multiple people for the same definition!

- Describe (algebraically and geometrically) the span of the following set of vectors in $\mathbb{R}^3$ \[\left\{\begin{bmatrix}\pi\\0\\0 \end{bmatrix}, \begin{bmatrix}0\\0\\e \end{bmatrix}\right\}\]

|

|

[10.23.15] - Friday

|

|

Sections covered: 4.4 (started)

|

Reading for next time: Section 4.4. Focus on the following:

- Examples 7 and 10

|

To discuss next time:

- Bring in your typed up solution to AP #3; make sure that it is a formal write-up that is fully justified with correct punctuation. Be prepared to talk through it on the document camera.

- Be able to write on the board, from memory, definitions of the following:

- a linear combination of vectors $v_1,\ldots,v_k \in V$

- $\operatorname{span}\{v_1,\ldots,v_k\}$

I may call on multiple people for the same definition!

- Describe (algebraically and geometrically) the span of the following set of vectors in $\mathbb{R}^3$ \[\left\{\begin{bmatrix}\pi\\0\\0 \end{bmatrix}, \begin{bmatrix}0\\0\\e \end{bmatrix}\right\}\]

|

| Week 10 |

|---|

|

[10.26.15] - Monday Another great discussion around a formal proof write-up. The math as well as the exposition were already excellent, so we had the opportunity to really fine-tune things. I was really impressed with the comments. Nice job all around!

|

|

Sections covered: 4.4 (finished)

|

Reading for next time: Section 4.5. Focus on the following:

- Definition 4.9

- Examples 2, 3, 6

|

To discuss next time: Let $\mathbf{u} = \begin{bmatrix} 1\\ 2\\ \pi \end{bmatrix}$.

- Are $\mathbf{u}$ and $\mathbf{0}$ (the zero vector in $\mathbb{R}^3$) linearly dependent?

- Find a nonzero vector $\mathbf{v}\in \mathbb{R}^3$ for which $\mathbf{u}$ and $\mathbf{v}$ are linearly dependent.

- Can you find all vectors $\mathbf{v}\in \mathbb{R}^3$ for which $\mathbf{u}$ and $\mathbf{v}$ are linearly dependent?

|

|

[10.28.15] - Wednesday Great presentations today! Thanks to everyone who went to the board and all those that participated in the discussion.

|

|

Sections covered: 4.5 (started)

|

Reading for next time: Section 4.6. Focus on the following:

- Definition 4.10

- Example 1

|

To discuss next time:

- Is $\left\{ \begin{bmatrix} 1\\ 0\\ 0 \end{bmatrix}, \begin{bmatrix} 0\\ 1\\ 0 \end{bmatrix}\right\}$ a basis for $\mathbb{R}^3$? Why or why not?

- Is $\left\{ \begin{bmatrix} 1\\ 0\\ 0 \end{bmatrix}, \begin{bmatrix} 0\\ 1\\ 0 \end{bmatrix}, \begin{bmatrix} 0\\ 0\\ 1 \end{bmatrix}, \begin{bmatrix} 1\\ 1\\ 1 \end{bmatrix}\right\}$ a basis for $\mathbb{R}^3$? Why or why not?

- Is $\left\{ \begin{bmatrix} 1\\ 0\\ 0 \end{bmatrix}, \begin{bmatrix} 1\\ 1\\ 0 \end{bmatrix}, \begin{bmatrix} 1\\ 1\\ 1 \end{bmatrix}\right\}$ a basis for $\mathbb{R}^3$? Why or why not?
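Here is a sketch for checking your answers to the three basis questions. It relies on two facts that you should be able to justify rather than take on faith: a basis for $\mathbb{R}^3$ has exactly three vectors, and three vectors in $\mathbb{R}^3$ form a basis exactly when the matrix having them as columns has nonzero determinant. The function names `det3` and `is_basis_of_R3` are made up for illustration.

```python
def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

def is_basis_of_R3(vectors):
    """Check the two assumed criteria: exactly 3 vectors, nonzero determinant."""
    if len(vectors) != 3:
        return False
    M = [[v[i] for v in vectors] for i in range(3)]  # vectors as columns
    return det3(M) != 0

set1 = [(1, 0, 0), (0, 1, 0)]
set2 = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]
set3 = [(1, 0, 0), (1, 1, 0), (1, 1, 1)]

for s in (set1, set2, set3):
    print(is_basis_of_R3(s))
```

The code only says yes or no. For each set, be ready to say which part of the definition of a basis fails or why both parts hold.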

|

|

[10.30.15] - Friday Another great day of presentations and discussion!

|

|

Sections covered: 4.5 (finished), 4.6 (started)

|

Reading for next time: Section 4.6. Focus on the following:

- Definition 4.10

- Examples 2 and 4

|

To discuss next time: Let $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \mathbf{v}_4$ be vectors in a vector space $V$ such that $\mathbf{v}_4 = a_1\mathbf{v}_1 + a_2\mathbf{v}_2 + a_3\mathbf{v}_3$ for some $a_1, a_2, a_3 \in \mathbb{R}$.

- Now assume $\mathbf{w} = b_1\mathbf{v}_1 + b_2\mathbf{v}_2 + b_3\mathbf{v}_3 + b_4\mathbf{v}_4$ for some $b_1, b_2, b_3, b_4 \in \mathbb{R}$. Show that $\mathbf{w}$ is a linear combination of $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3$.

- Explain why this shows that \[\mathbf{v}_4 \in \operatorname{span}\{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3\}\implies \operatorname{span}\{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3, \mathbf{v}_4\} = \operatorname{span}\{\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3\}.\]

|

| Week 11 |

|---|

|

[11.02.15] - Monday

|

|

Sections covered: 4.6 (continued)

|

Reading for next time: Section 4.6. Focus on the following:

- Blue box on page 235

- Example 6

|

To discuss next time:

- Let $v_1 = \begin{bmatrix} 1\\ -1\\ 1\\ 0 \end{bmatrix}$, $v_2 = \begin{bmatrix} 2\\ -1\\ -1\\-1 \end{bmatrix}$, $v_3 = \begin{bmatrix} 3\\ -6\\ 12\\ 3 \end{bmatrix}$, and $v_4 = \begin{bmatrix} 4\\ -3\\ 2\\-\frac{1}{2} \end{bmatrix}$. Follow the process in the blue box on page 235 to find a basis for $W = \operatorname{span}\{v_1, v_2, v_3, v_4\}$.
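After working this by hand, you can compare with the sketch below. I am assuming here that the process in question amounts to placing the vectors as the columns of a matrix, row reducing, and keeping the original vectors that sit in the pivot columns; check this against the book. The function `pivot_columns` is written for this example and uses exact fractions.

```python
from fractions import Fraction

def pivot_columns(M):
    """Row reduce a copy of M and return the (0-based) pivot column indices."""
    M = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(M), len(M[0])
    pivots = []
    r = 0
    for c in range(cols):
        # Look for a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if p is None:
            continue
        M[r], M[p] = M[p], M[r]
        pivot = M[r][c]
        M[r] = [x / pivot for x in M[r]]
        for i in range(rows):
            if i != r and M[i][c] != 0:
                factor = M[i][c]
                M[i] = [x - factor * y for x, y in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
        if r == rows:
            break
    return pivots

vectors = [
    (1, -1, 1, 0),
    (2, -1, -1, -1),
    (3, -6, 12, 3),
    (4, -3, 2, Fraction(-1, 2)),
]
A = [[v[i] for v in vectors] for i in range(4)]  # vectors as columns
pivots = pivot_columns(A)
print(pivots)
print([vectors[j] for j in pivots])  # candidate basis for W
```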

|

|

[11.04.15] - Wednesday

|

|

Sections covered: 4.6 (finished)

|

Reading for next time: Section 4.8. Focus on the following:

- Introduction

- Examples 1 and 2

|

To discuss next time:

- Let $B_1$ be the following ordered basis for $P_2$: $B_1 = \{t^2,t,1\}$.

- Explain why $\left[3t^2 + 2\right]_{B_1} = \begin{bmatrix} 3\\ 0\\ 2 \end{bmatrix}$.

- Let $B_2$ be the following ordered basis for $P_2$: $B_2 = \{1,t,t^2\}$.

- Explain why $\left[3t^2 + 2\right]_{B_2} = \begin{bmatrix} 2\\ 0\\ 3 \end{bmatrix}$.

- Let $B_3$ be the following ordered basis for $P_2$: $B_3 = \{t^2+t+1,t^2+t,t^2\}$.

- Explain why $\left[3t^2 + 2\right]_{B_3} = \left[\begin{array}{r} 2\\ -2\\ 3 \end{array}\right]$.
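The three coordinate vectors can be checked mechanically: form the linear combination of the basis elements with the given coordinates and see whether $3t^2+2$ comes out. In this sketch a polynomial in $P_2$ is stored as a triple of coefficients (constant, $t$, $t^2$); that storage convention and the function name `combine` are choices made for this example.

```python
def combine(coords, basis):
    """Form the linear combination sum(coords[i] * basis[i]).

    Each polynomial is a triple (constant, t coefficient, t^2 coefficient).
    """
    return tuple(sum(c * p[k] for c, p in zip(coords, basis)) for k in range(3))

p = (2, 0, 3)                            # 3t^2 + 2

B1 = [(0, 0, 1), (0, 1, 0), (1, 0, 0)]   # {t^2, t, 1}
B2 = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]   # {1, t, t^2}
B3 = [(1, 1, 1), (0, 1, 1), (0, 0, 1)]   # {t^2+t+1, t^2+t, t^2}

print(combine((3, 0, 2), B1) == p)
print(combine((2, 0, 3), B2) == p)
print(combine((2, -2, 3), B3) == p)
```

Notice that the same polynomial gets different coordinate vectors; the order of the basis matters.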

|

|

[11.06.15] - Friday

|

|

Sections covered: 4.8 (started)

|

Reading for next time: Section 4.8. Focus on the following:

- Introduction to transition matrices (pages 261-262)

- Example 4

|

To discuss next time:

- Bring in your typed up solution to AP #1; make sure that it is a formal write-up that is fully justified with correct punctuation. Be prepared to talk through it on the document camera. Note that the assignment sheet has been updated; a little extra help was added to AP #3.

|

| Week 12 |

|---|

|

[11.09.15] - Monday

|

|

Sections covered: 4.8 (finished)

|

Reading for next time: Section 4.9. Focus on the following:

- Intro through Example 1

|

To discuss next time:

- Give an example of a $3 \times 4$ matrix for which the row space has dimension 2. (You can make your example as simple as you like.)

- What is the dimension of the column space of your example?

- Is it possible to find a $3 \times 4$ matrix for which the row space has dimension 4?

- Is it possible to find a $3 \times 4$ matrix for which the column space has dimension 4?

|

|

[11.11.15] - Wednesday

|

|

Sections covered: 4.7, 4.9 (started)

|

Reading for next time: Section 4.9. Focus on the following:

- Theorem 4.18 (and the discussion following the proof that defines rank)

- Theorem 4.19 (the Rank-Nullity Theorem)

|

To discuss next time:

- If $A$ is an $m\times n$ matrix, explain why $\operatorname{rank} A \le \operatorname{minimum}(m,n)$.

- If $A$ is a $5\times 7$ matrix, explain why there is a nontrivial solution to $A\mathbf{x} = \mathbf{0}$. Try to use the Rank-Nullity Theorem (and part 1).

|

|

[11.13.15] - Friday

|

|

Sections covered: 4.9 (finished)

|

Reading for next time: Section 6.1. Focus on the following:

- Definition 6.1

- Examples 1, 4

- Theorem 6.2 as well as the paragraphs immediately before and immediately after it.

|

To discuss next time:

- Bring in your typed up solution to #40 from Section 4.6; make sure that it is a formal write-up that is fully justified with correct punctuation. Be prepared to talk through it on the document camera.

|

| Week 13 |

|---|

|

[11.16.15] - Monday

|

|

Sections covered: 6.1 (started)

|

Reading for next time: Section 6.1. Focus on the following:

- Theorem 6.3 and the paragraph preceding it.

- Example 11

|

To discuss next time: let's make a review sheet for the midterm. Same directions as before, but with a new link...

- Please construct one question of the form "provide a specific example which satisfies the given description, or if no such example exists, briefly explain why not" and add it to our review sheet here

https://www.sharelatex.com/project/564a2fa3b726d15f4ab3db26

- put your initials next to your questions, and

- put an answer in the "Answers" section of the document.

- Repeat with a T/F question.

- Make sure to write down your questions and answers on paper too, so you are prepared to present them in class.

|

|

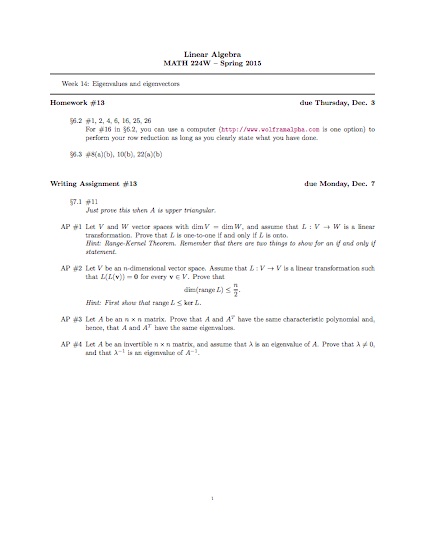

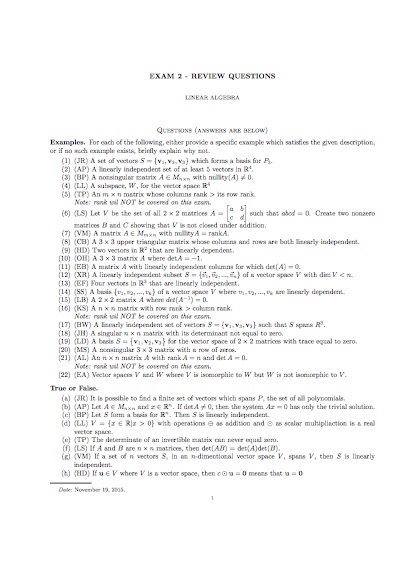

[11.18.15] - Wednesday Another nice job creating review questions! Here's (a very slightly edited version of) what you made:

Exam2ReviewQuestions.pdf

Let me know if you find any errors. Happy studying!

|

|

Sections covered: 6.1 (finished), 6.2 (started)

|

|

Reading for next time: None. Focus on studying.

|

|

[11.20.15] - Friday

|

|

Sections covered: 6.2

|

Reading for next time: Section 6.3. Focus on the following:

- Introduction

- Example 1

|

To discuss next time:

- We have seen that "taking the derivative" is a linear transformation. That is, for every positive integer $n$, the function $L:P_n\rightarrow P_n$ defined by $L(p(t)) = p'(t)$ is a linear transformation. Section 6.2 is about finding a way to view $L$ as a matrix transformation.

- Let $p(t) =a + bt + ct^2$. Compare $p'(t)$ with $\begin{bmatrix}0 & 1 & 0\\0 & 0 & 2\\0 & 0 & 0 \end{bmatrix}\begin{bmatrix}a\\b\\c \end{bmatrix}$.

- Let $p(t) =a + bt + ct^2 + dt^3$. Compare $p'(t)$ with $\begin{bmatrix}0 & 1 & 0 & 0\\0 & 0 & 2 & 0\\0 & 0 & 0 & 3\\0 & 0 & 0 & 0\end{bmatrix}\begin{bmatrix}a\\b\\c\\d \end{bmatrix}$.

- Can you generalize the above situation? What matrix would you use to compute the derivative of $p(t)= a_0 + a_1t + a_2t^2 + \cdots + a_nt^n$? Why?
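To test a guess for the general pattern, you can try something like the following sketch. It assumes one natural generalization of the two matrices above (the entry just to the right of the diagonal in row $i$ is $i$, counting rows from 1) and stores a polynomial as its list of coefficients $a_0, a_1, \ldots, a_n$. The function names `derivative_matrix` and `apply` are made up for illustration.

```python
def derivative_matrix(n):
    """Assumed (n+1) x (n+1) pattern: entry (i, i+1) is i+1 with 0-based
    indices, and every other entry is 0."""
    size = n + 1
    return [[j if j == i + 1 else 0 for j in range(size)] for i in range(size)]

def apply(M, v):
    """Matrix-vector product, with v a list of coefficients."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in M]

print(derivative_matrix(2))          # compare with the 3x3 matrix above

# p(t) = 5 + 4t + 3t^2 + 2t^3, so p'(t) = 4 + 6t + 6t^2.
coeffs = [5, 4, 3, 2]
print(apply(derivative_matrix(3), coeffs))
```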

|

| Week 14 |

|---|

|

[11.30.15] - Monday

|

|

Sections covered: 6.2 (finished), 6.3 (started)

|

Reading for next time: Section 7.1. Focus on the following:

- Intro through Definition 7.1

|

To discuss next time:

- Let $L:\mathbb{R}^2\rightarrow\mathbb{R}^2$ be defined by $L\left(\begin{bmatrix}x\\y\end{bmatrix}\right) = \begin{bmatrix}y\\x\end{bmatrix}$.

- Find all $\mathbf{v} \in \mathbb{R}^2$ such that $L(\mathbf{v}) = \mathbf{v}$. Can you also explain this geometrically (by thinking about $L$ geometrically)?

- Find all $\mathbf{v} \in \mathbb{R}^2$ such that $L(\mathbf{v}) = -\mathbf{v}$. Can you explain this geometrically as well?

- Find all $\mathbf{v} \in \mathbb{R}^2$ such that $L(\mathbf{v}) = 2\mathbf{v}$. Can you explain this geometrically?

|

|

[12.02.15] - Wednesday

|

|

Sections covered: 6.3 (finished), 7.1 (started)

|

Reading for next time: Section 7.1. Focus on the following:

- The definitions of eigenvalue and eigenvector of a matrix in the middle of page 442

- Example 10

|

To discuss next time:

- Be able to reproduce (on the board and in words) the definition of an eigenvalue of a matrix.

- Assume that $\lambda$ is an eigenvalue of an invertible matrix $A$. Can you explain why $\lambda\neq 0$?

- After reading Example 10, can you explain why $\lambda$ is an eigenvalue of a matrix $A \in M_{n\times n}$ if and only if the equation $(\lambda I_{n} - A)\mathbf{x} = \mathbf{0}$ has a nontrivial solution?

|

|

[12.04.15] - Friday

|

|

Sections covered: 7.1 (continued)

|

Reading for next time: Page 410. Focus on the following:

- The definition of similar matrices on page 410

|

To discuss next time:

- "Similar matrices are similar." Let $A,B \in M_{n\times n}$, and assume $B$ is similar to $A$, i.e. $B = P^{-1}AP$ for some invertible matrix $P$.

- Explain why $\operatorname{Tr}(A) = \operatorname{Tr}(B)$. Hint: use properties of the trace.

- Explain why $\det(A) = \det(B)$. Hint: use properties of the determinant.

- Can you think of other things that may be the same for similar matrices?

- Explain why $B^k = P^{-1}A^kP$ for any positive integer $k$. Hint: to see what's going on, start with $k=2$, and try to simplify $B^2 = (P^{-1}AP)^2$.
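Before proving these, it can help to see them happen on a concrete pair of similar matrices. The sketch below uses a $2\times 2$ example chosen for illustration (the matrices `A` and `P` are not from the text, and `P` was picked so that its inverse has integer entries); the helper functions are written just for this example.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def trace(M):
    return sum(M[i][i] for i in range(len(M)))

def det2(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def power(M, k):
    """M multiplied by itself k times, for a positive integer k."""
    result = M
    for _ in range(k - 1):
        result = matmul(result, M)
    return result

A = [[2, 1],
     [3, 4]]
P = [[1, 1],
     [0, 1]]
P_inv = [[1, -1],
         [0, 1]]

B = matmul(P_inv, matmul(A, P))   # B = P^{-1} A P

print(trace(A), trace(B))
print(det2(A), det2(B))
print(power(B, 3) == matmul(P_inv, matmul(power(A, 3), P)))
```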

|

| Week 15 |

|---|

|

[12.07.15] - Monday

|

|

Sections covered: 7.1 (finished)

|

Reading for next time: Section 7.2. Focus on the following:

- Intro through Definition 7.3

- The definition of multiplicity on page 459

- Theorem 7.3, Theorem 7.4, and the remark following Theorem 7.4

- Example 2

|

To discuss next time: Consider the following matrices: \[A=\begin{bmatrix}1 & 0 & 0\\3 & 2 & 0\\ 0 & 0 & 1\end{bmatrix}\quad\quad B = \begin{bmatrix}1 & 0 & 0\\3 & 1 & 0\\ 0 & 0 & 2\end{bmatrix} \]

- Find the characteristic polynomials for $A$ and $B$. (They are the same.)

- Find a basis for $E_1(A)$, i.e. the eigenspace of A associated to $\lambda=1$.

- Find a basis for $E_2(A)$, i.e. the eigenspace of A associated to $\lambda=2$.

- Show that when the basis for $E_1(A)$ is combined with the basis for $E_2(A)$, you get a basis for $\mathbb{R}^3$.

- Find a basis for $E_1(B)$. Compare $\dim E_1(A)$, $\dim E_1(B)$, and the multiplicity of $\lambda=1$ (see page 459).
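To check the dimensions you find, here is a sketch that computes $\dim E_\lambda(M)$ without finding a basis. It assumes the Rank-Nullity Theorem from Section 4.9, so that $\dim E_\lambda(M) = 3 - \operatorname{rank}(M - \lambda I_3)$ (which agrees with the null space of $\lambda I_3 - M$). The function names `rank` and `eigenspace_dim` are made up for this example.

```python
from fractions import Fraction

def rank(M):
    """Rank via Gaussian elimination with exact fractions."""
    M = [[Fraction(x) for x in row] for row in M]
    r = 0
    for c in range(len(M[0])):
        # Look for a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if p is None:
            continue
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, len(M)):
            factor = M[i][c] / M[r][c]
            M[i] = [x - factor * y for x, y in zip(M[i], M[r])]
        r += 1
        if r == len(M):
            break
    return r

def eigenspace_dim(M, lam):
    """dim of the null space of (M - lam * I), using rank-nullity."""
    n = len(M)
    shifted = [[M[i][j] - (lam if i == j else 0) for j in range(n)]
               for i in range(n)]
    return n - rank(shifted)

A = [[1, 0, 0], [3, 2, 0], [0, 0, 1]]
B = [[1, 0, 0], [3, 1, 0], [0, 0, 2]]

print(eigenspace_dim(A, 1), eigenspace_dim(A, 2))
print(eigenspace_dim(B, 1), eigenspace_dim(B, 2))
```

Compare these numbers with the multiplicities of $\lambda = 1$ and $\lambda = 2$ in the characteristic polynomial.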

|

|

[12.09.15] - Wednesday

|

|

Sections covered: 7.2 (started)

|

|

Reading for next time: Finish reading Section 7.2, and think about reviewing for the final.

|

To discuss next time: Let's make a review sheet for the final exam. Focus on the new material. Same directions as before, but with a new link...

- Please construct one question of the form "provide a specific example which satisfies the given description, or if no such example exists, briefly explain why not" and add it to our review sheet here

https://www.sharelatex.com/project/5668781f90d2888d6f95071b

- put your initials next to your questions, and

- put an answer in the "Answers" section of the document.

- Repeat with a T/F question.

- Make sure to write down your questions and answers on paper too, so you are prepared to present them in class.

|